(Editor’s note: A version of this article was previously published on n8n.blog)

For early- and growth-stage startups, SEO is often the most cost-effective way to build visibility and drive pipeline. But manually monitoring search results and analyzing competitors eats up time and produces inconsistent insights. Automating SERP analysis with n8n, SerpApi, and OpenAI gives your team a repeatable process to collect data, analyze competitors, and generate actionable insights at scale. This guide walks through a ready-to-use workflow that replaces manual research with an automated pipeline.

Key takeaways

- Automate SERP data collection for both desktop and mobile to capture a complete picture.

- Crawl top competitor pages and extract content for deeper keyword and topic insights.

- Use OpenAI to generate summaries, long-tail keywords, and n-gram analysis.

- Persist results in Google Sheets for easy collaboration, reporting, and iteration.

- Scale competitive research and content planning without adding headcount.

Automate SERP Analysis with n8n & SerpApi

Monitoring search engine results pages (SERPs) at scale is essential for modern SEO teams. This post walks through a ready-to-use n8n template that automates SERP data collection, crawls top-ranking pages, extracts content, runs competitor analysis with OpenAI, and saves results to Google Sheets.

Why automate SERP analysis?

Manually checking search results is slow, inconsistent, and error-prone. An automated SERP analysis workflow helps you:

- Collect desktop and mobile SERP data simultaneously

- Identify top-ranking pages and FAQs from the search results

- Crawl and extract page content for deeper competitor analysis

- Generate summaries, target keywords, and n-gram reports with OpenAI

- Persist structured data in Google Sheets for collaboration and reporting

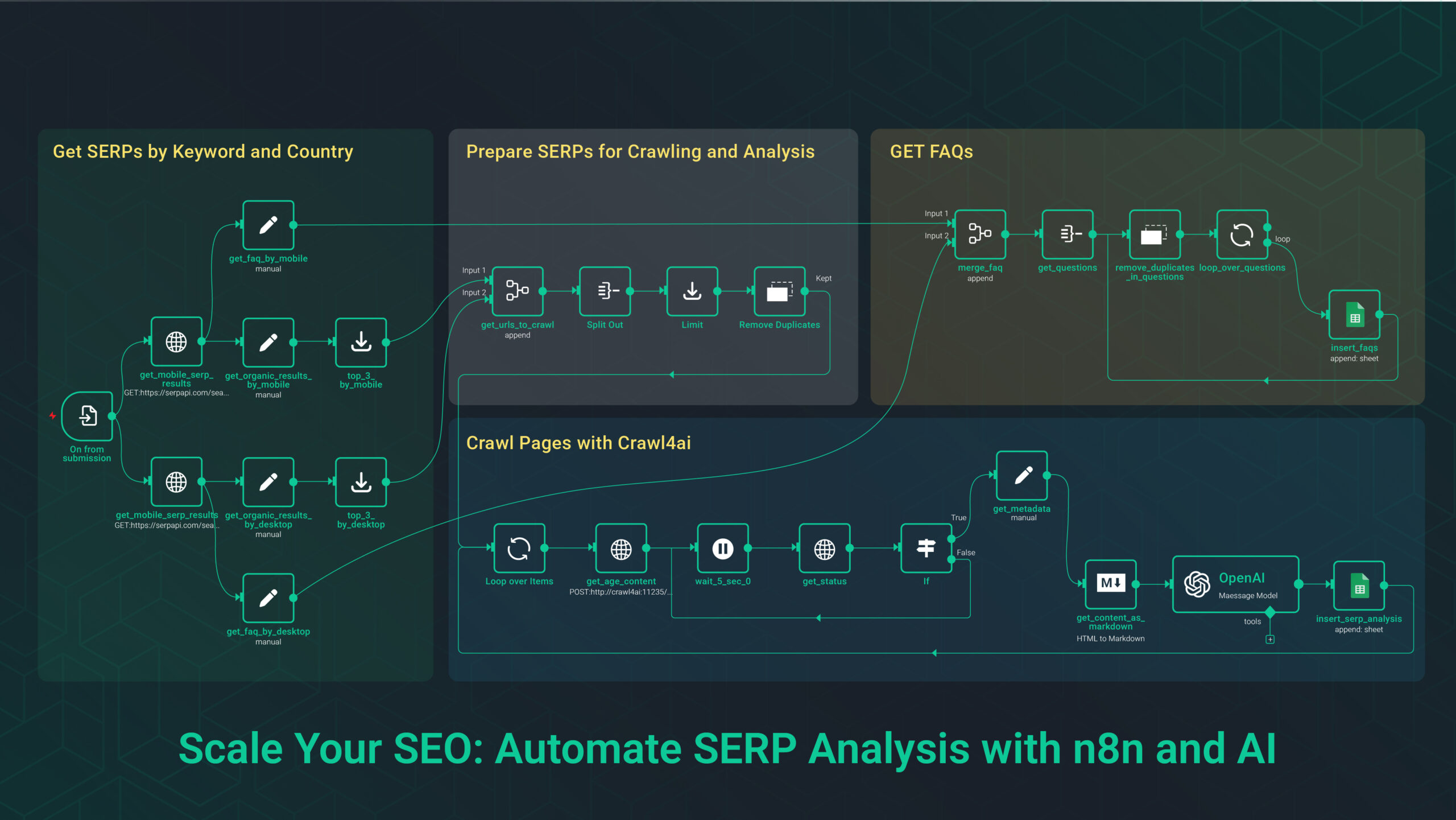

Template overview

The provided n8n template (“SERP Analysis Template”) uses a simple, modular pipeline that you can customize quickly. Key components include:

- Form Trigger: Start the workflow by submitting a focus keyword and country.

- SerpApi requests: Fetch desktop and mobile SERP results using SerpApi.

- Prepare results: Extract organic results and related questions (FAQs).

- Limit & dedupe: Limit top results and remove duplicate URLs before crawling.

- Crawl4ai: Queue top-3 results for page crawling and HTML cleaning.

- OpenAI analysis: Convert cleaned HTML to markdown and analyze competitor articles for summaries, focus keywords, long-tail keywords, and n-grams.

- Google Sheets: Write the SERP analysis and FAQs into separate sheets for easy review.

Step-by-step walkthrough

1. Start with a form trigger

The workflow begins with a form that collects two inputs: Focus Keyword and Country. This keeps the workflow flexible and allows team members to trigger new analyses without modifying the flow.

2. Query SerpApi for desktop and mobile

Two HTTP Request nodes call SerpApi, one with device=desktop and one with device=mobile. Capturing both matters because rankings and rich features differ by device.

// Example query params

q = "={{ $json['Focus Keyword'] }}"

gl = "={{ $json.Country }}"

device = "desktop" or "mobile"
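Outside n8n, the same pair of requests can be sketched in a few lines of Python. This is an illustration only, not the template's implementation: the function name `build_serp_urls` and the `engine=google` parameter are assumptions, and the sketch only builds the request URLs rather than calling the API.

```python
from urllib.parse import urlencode

# SerpApi's search endpoint; check the SerpApi docs for the variant you use.
SERPAPI_ENDPOINT = "https://serpapi.com/search"


def build_serp_urls(keyword: str, country: str, api_key: str) -> dict[str, str]:
    """Return one request URL per device for the same keyword and country."""
    urls = {}
    for device in ("desktop", "mobile"):
        params = {
            "engine": "google",  # assumption: the workflow targets Google results
            "q": keyword,        # the form's Focus Keyword
            "gl": country,       # the form's Country, e.g. "us" or "de"
            "device": device,
            "api_key": api_key,
        }
        urls[device] = f"{SERPAPI_ENDPOINT}?{urlencode(params)}"
    return urls


urls = build_serp_urls("n8n automation", "us", "YOUR_API_KEY")
```

Keeping every parameter except `device` identical is what makes the desktop and mobile results directly comparable.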

3. Extract organic results & related questions

Use n8n Set nodes to map the organic_results and related_questions from SerpApi responses. Merge mobile + desktop FAQs and then de-duplicate both URLs and questions to keep only unique items.
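As a rough sketch of the merge-and-dedupe logic for FAQs, the snippet below combines `related_questions` from both responses and keeps the first occurrence of each question. The helper names (`normalize`, `unique_by`, `collect_faqs`) are hypothetical, and the case-insensitive comparison is an assumption about what should count as a duplicate; the template itself does this with n8n nodes.

```python
def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so near-identical strings compare equal."""
    return " ".join(text.lower().split())


def unique_by(items: list[dict], key: str) -> list[dict]:
    """Keep the first item for each distinct value of `key`, preserving order."""
    seen = set()
    unique = []
    for item in items:
        value = item.get(key)
        if not value:
            continue  # skip entries that lack the field entirely
        marker = normalize(value)
        if marker not in seen:
            seen.add(marker)
            unique.append(item)
    return unique


def collect_faqs(desktop: dict, mobile: dict) -> list[dict]:
    """Merge related_questions from both SerpApi responses and de-duplicate."""
    merged = desktop.get("related_questions", []) + mobile.get("related_questions", [])
    return unique_by(merged, "question")


# Hypothetical sample responses, trimmed to the one field used here.
desktop = {"related_questions": [{"question": "What is n8n?"}, {"question": "Is n8n free?"}]}
mobile = {"related_questions": [{"question": "what is  n8n?"}, {"question": "How does SerpApi work?"}]}
faqs = collect_faqs(desktop, mobile)
```

Here the mobile variant of "What is n8n?" is dropped because it differs only in case and spacing.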

4. Limit top results and merge lists

To control cost and processing time, the template caps the number of pages to crawl (for example, the top 3 results from each device). Together, the Limit and Remove Duplicates nodes keep the crawl list short and prevent the same URL from being crawled twice.
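The cap-then-merge step can be illustrated with a short sketch. It assumes each organic result is a dict with a `link` field, as in SerpApi's `organic_results`; the function name `select_crawl_targets` and the sample URLs are made up for the example.

```python
def select_crawl_targets(desktop: list[dict], mobile: list[dict], per_device: int = 3) -> list[str]:
    """Take the top results from each device, then drop repeated URLs."""
    links = [result["link"] for result in desktop[:per_device] + mobile[:per_device]]
    # dict.fromkeys preserves first-seen order while removing duplicates.
    return list(dict.fromkeys(links))


# Hypothetical ranked results for the same keyword on each device.
desktop_results = [{"link": f"https://example.com/{slug}"} for slug in ("a", "b", "c", "d")]
mobile_results = [{"link": f"https://example.com/{slug}"} for slug in ("b", "a", "e", "f")]
targets = select_crawl_targets(desktop_results, mobile_results)
```

With a cap of 3 per device, six candidates collapse to four unique URLs, so two crawl jobs are avoided.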

5. Crawl pages with Crawl4ai

Each unique URL is sent to a /crawl endpoint powered by Crawl4ai. After submitting crawl jobs, the workflow periodically polls the task status and, once completed, pulls result.cleaned_html and metadata (title, description, canonical URL).
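The submit-then-poll pattern might look like the sketch below. It deliberately avoids naming Crawl4ai routes or response fields beyond those mentioned above: `fetch_status` is a caller-supplied function that returns the task's current JSON, and the status strings `"completed"` and `"failed"` are assumptions to adjust to whatever your Crawl4ai version returns. The 5-second default mirrors the wait loop the workflow includes.

```python
import time
from typing import Callable


def wait_for_crawl(
    fetch_status: Callable[[], dict],
    interval_seconds: float = 5.0,
    max_attempts: int = 24,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Poll until the crawl task finishes, then return its `result` payload."""
    for _ in range(max_attempts):
        task = fetch_status()
        status = task.get("status")
        if status == "completed":  # assumed status value
            return task["result"]
        if status == "failed":     # assumed status value
            raise RuntimeError(f"Crawl task failed: {task.get('error', 'unknown error')}")
        sleep(interval_seconds)
    raise TimeoutError(f"Crawl task not finished after {max_attempts} checks")


# Simulated responses stand in for real HTTP calls in this example.
responses = iter([
    {"status": "pending"},
    {"status": "completed", "result": {"cleaned_html": "<p>Hi</p>", "metadata": {"title": "T"}}},
])
result = wait_for_crawl(lambda: next(responses), sleep=lambda seconds: None)
```

Bounding the number of attempts is the main design choice here: a task that never completes ends in a clear timeout instead of a workflow that hangs.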

6. Convert to Markdown and analyze with OpenAI

A Markdown conversion node converts cleaned HTML into readable text. Then the OpenAI node (configured as a content-analysis assistant) receives the article text and outputs:

- Short summary

- Potential focus keyword

- Relevant long-tail keywords

- N-gram analysis (unigrams, bigrams, trigrams)
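To make the n-gram output concrete, here is a small local illustration of what unigram, bigram, and trigram counts are. It is not how the OpenAI node produces its analysis; the tokenizer regex and the `top_ngrams` helper are assumptions chosen for brevity.

```python
import re
from collections import Counter


def top_ngrams(text: str, n: int, limit: int = 5) -> list[tuple[str, int]]:
    """Count runs of n consecutive words and return the most frequent ones."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    grams = (" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return Counter(grams).most_common(limit)


sample = "SERP analysis saves time. Automated SERP analysis scales."
bigrams = top_ngrams(sample, 2)
trigrams = top_ngrams(sample, 3)
```

In this sample the bigram "serp analysis" appears twice and every other bigram once, which is the kind of signal that points to a phrase competitors repeat.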

7. Save results to Google Sheets

Finally, analysis results are appended to a Google Sheet named SERPs, with columns for position, title, link, snippet, summary, keywords, and n-grams. FAQs are appended to a separate sheet for easy FAQ mining.
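The mapping from a crawled result to a sheet row can be sketched as follows. The column order follows the list above, but the exact header strings and the dictionary keys in the sample data are hypothetical; match them to the headers in your own sheet.

```python
# Column order for the SERPs sheet; header strings here are assumptions.
SERP_COLUMNS = ["position", "title", "link", "snippet", "summary", "keywords", "n-grams"]


def to_sheet_row(result: dict, analysis: dict) -> list[str]:
    """Flatten a SERP result plus its analysis into one row of cell values."""
    merged = {**result, **analysis}
    row = []
    for column in SERP_COLUMNS:
        value = merged.get(column, "")
        if isinstance(value, (list, tuple)):
            value = ", ".join(str(item) for item in value)  # one cell per list
        row.append(str(value))
    return row


row = to_sheet_row(
    {"position": 1, "title": "Example", "link": "https://example.com", "snippet": "An example."},
    {"summary": "Short summary.", "keywords": ["serp analysis", "n8n"], "n-grams": ["serp analysis"]},
)
```

Joining lists into a single cell keeps one row per URL, which makes the sheet easier to filter and sort.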

Best practices and tips

Secure your API keys

Store SerpApi, Crawl4ai, OpenAI, and Google keys in n8n's credential store. Never hard-code keys in nodes or public templates.

Start small — set a limit

When testing, use the Limit node to cap crawled pages (for example, 3 items). This reduces API costs and speeds up iteration.

Respect rate limits and bot policies

Check SerpApi and Crawl4ai rate limits, and space out requests to avoid throttling (the workflow includes a 5-second wait loop). Before crawling a site, confirm that its robots.txt permits it.

De-duplicate early

Remove duplicate URLs and duplicate FAQ questions before crawling or writing to Sheets — this saves tokens and simplifies analysis.

Customize the OpenAI prompt

Tune the OpenAI system message and user prompt to match your desired output format and depth of analysis. Consider asking for JSON output, which is easier to parse into Sheets columns.
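If you do ask for JSON, it helps to validate the response before writing it to a sheet. The sketch below assumes a response shape with four keys; those key names and the `parse_analysis` helper are hypothetical, so use whatever your prompt actually specifies.

```python
import json

# Hypothetical keys; align these with the fields your prompt requests.
REQUIRED_KEYS = {"summary", "focus_keyword", "long_tail_keywords", "ngrams"}


def parse_analysis(raw: str) -> dict:
    """Parse the model's reply and fail loudly if expected fields are missing."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("Model response is not a JSON object")
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"Model response is missing keys: {sorted(missing)}")
    return data


reply = json.dumps({
    "summary": "Overview of automated SERP analysis.",
    "focus_keyword": "serp analysis",
    "long_tail_keywords": ["automate serp analysis with n8n"],
    "ngrams": {"bigrams": ["serp analysis"]},
})
analysis = parse_analysis(reply)
```

Failing on missing keys surfaces prompt drift early, instead of silently writing blank cells.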

Troubleshooting checklist

- No results from SerpApi: verify query parameter formatting and your SerpApi plan limits.

- Crawl tasks stuck: ensure the Crawl4ai endpoint is reachable from n8n and your task polling logic matches the API response structure.

- Duplicate entries in Sheets: double-check the Remove Duplicates node configuration and the field used to compare (URL or question text).

- OpenAI analysis fails or times out: reduce input size by trimming the HTML or sending only the article's main content.
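For the last item, one simple way to reduce input size is to cut the converted Markdown at a paragraph boundary once it exceeds a character budget. This is a minimal sketch: the `trim_to_budget` helper and the 12,000-character default are assumptions, and characters are only a rough proxy for tokens.

```python
def trim_to_budget(markdown: str, max_chars: int = 12000) -> str:
    """Keep whole paragraphs from the start of the text until the budget is spent."""
    if len(markdown) <= max_chars:
        return markdown
    kept = []
    used = 0
    for paragraph in markdown.split("\n\n"):
        cost = len(paragraph) + (2 if kept else 0)  # 2 accounts for the separator
        if used + cost > max_chars:
            break
        kept.append(paragraph)
        used += cost
    # Fall back to a hard cut if even the first paragraph is too long.
    return "\n\n".join(kept) if kept else markdown[:max_chars]


trimmed = trim_to_budget("aaaa\n\nbbbb\n\ncccc", max_chars=10)
```

Cutting on paragraph boundaries avoids handing the model a sentence that stops mid-thought.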

Practical use cases

This automation is useful for:

- Competitive content research — quickly summarize top-ranking articles and extract keywords

- Article briefs — generate focused outlines based on competitor n-grams and long-tail queries

- FAQ and schema opportunities — gather common questions to optimize content for People Also Ask or FAQ schema

- Ranking monitoring — run scheduled comparisons for target keywords and export to Sheets

Implementation checklist

- Import the n8n template into your instance.

- Configure credentials: SerpApi, Crawl4ai, OpenAI, Google Sheets.

- Create Google Sheets with the recommended columns (SERPs and FAQs).

- Test with a single keyword, set Country to us or de, and set the Limit node to 1–3.

- Review the sheet output and iterate on the OpenAI prompt for better results.

Get started with York IE

Automating SERP analysis with n8n, SerpApi, Crawl4ai, and OpenAI turns manual research into a repeatable, scalable process. The template gives you a launchpad to collect SERP data, crawl competitors, and generate actionable analysis stored in Google Sheets for easy team access.

In today’s competitive search landscape, speed and precision make all the difference. Automating SERP analysis transforms a time-consuming manual process into a reliable workflow that consistently delivers competitor insights, keyword opportunities, and actionable content ideas. For startups looking to maximize limited resources, this automation provides leverage: it frees your team from repetitive research so they can focus on creating the content and strategies that drive growth. Start small with a single keyword workflow and scale it into a powerful engine for SEO and market intelligence.